As 2025 comes to an end and we look back at the unique content that we analysed through the year, the string of videos that surfaced in the wake of the military escalation between India and Pakistan in May stands out.

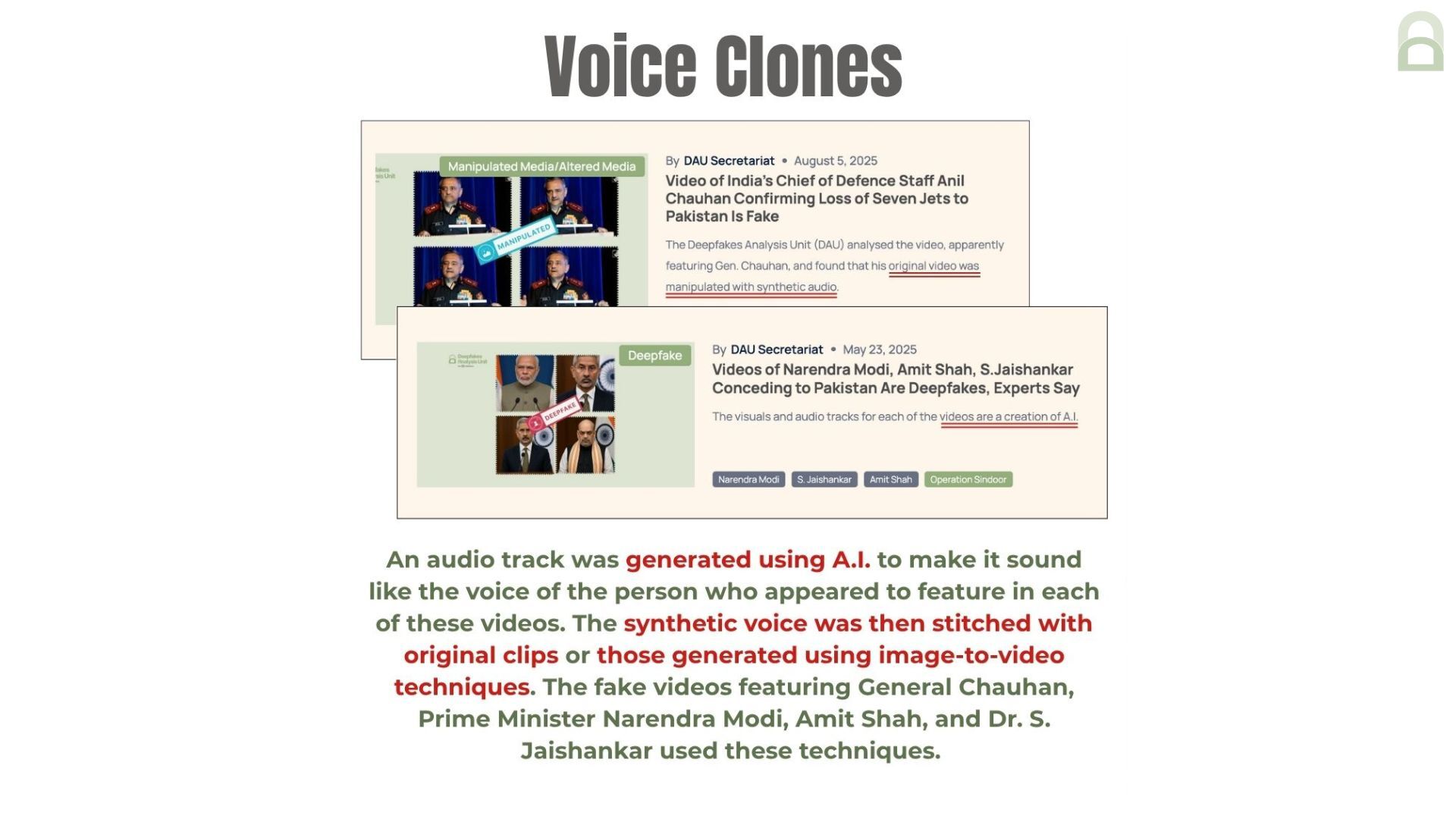

The individuals targeted through those videos were senior Indian defence officials, such as the chiefs of the army, air force, and navy, as well as top Indian ministers. Their visuals were used with fully or partially A.I.-generated audio tracks to spin narratives about India’s supposed loss of combat equipment and personnel to Pakistan, or India conceding to Pakistan during what was popularly known as “Operation Sindoor”.

Amidst the heightened tensions between the two nuclear-armed neighbours, social media platforms in India became a theatre of a virtual war being fought through the misuse of generative A.I.

Statements that were never made by representatives of the Indian government were being misattributed to them. What’s new? The blurring of lines between synthetic and authentic content, and the threat of such falsehoods, misinformation, and targeted disinformation accentuating the tensions in real time at an unprecedented speed.

In the videos we debunked, similar visual and audio manipulation techniques were used.

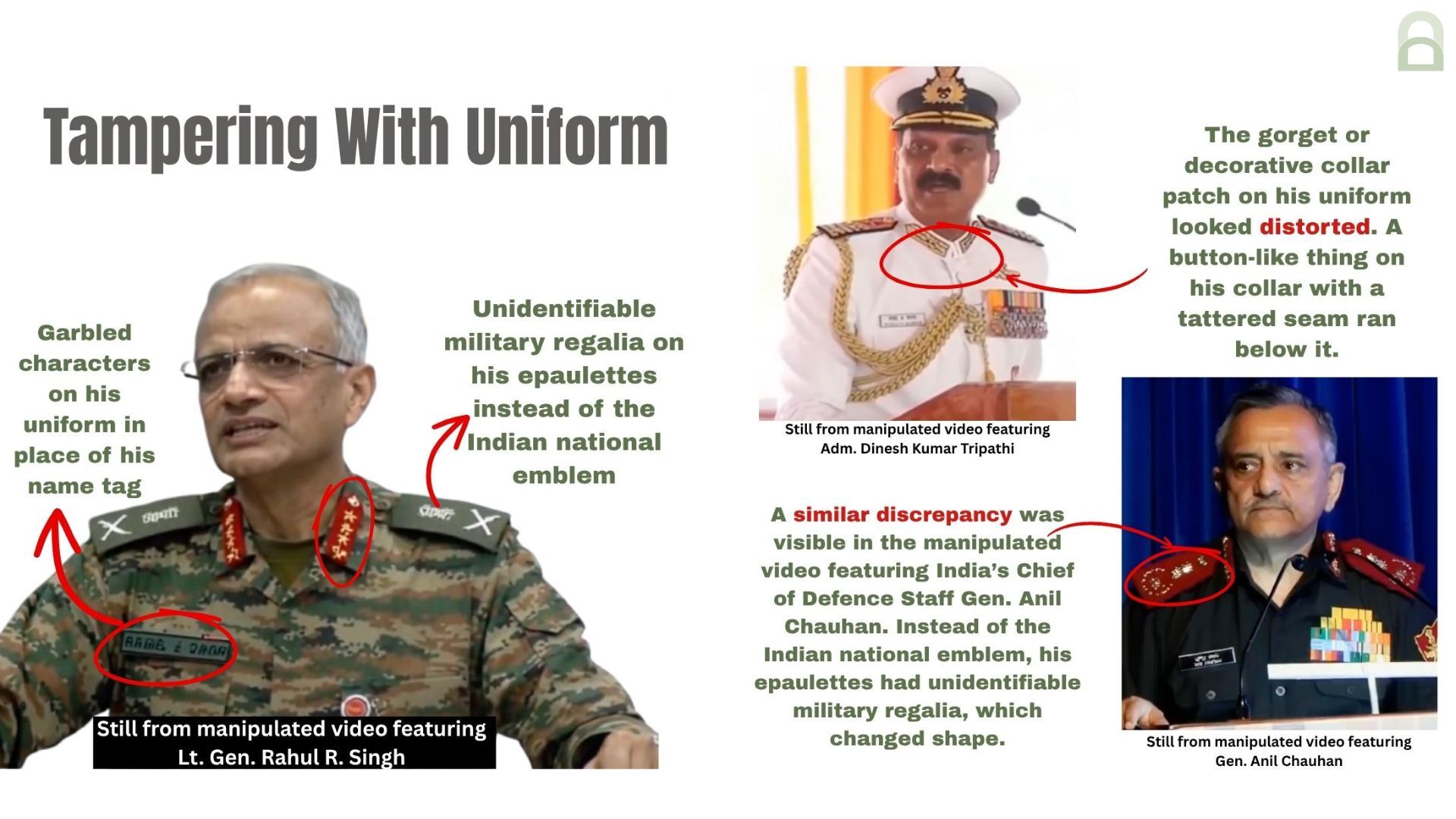

In the videos that apparently featured defence officials, they were seen in uniform, giving a speech or making a statement. The insignia on their uniforms and their name tags were manipulated in most cases.

In multiple videos, the body movements of the subjects featured did not match those in the corresponding source videos. Our partners from RIT’s DeFake Project pointed to generation techniques that were likely used in some of these videos, such as image-to-video generation, in which screenshots from a source video are used to generate video clips that resemble the source video.

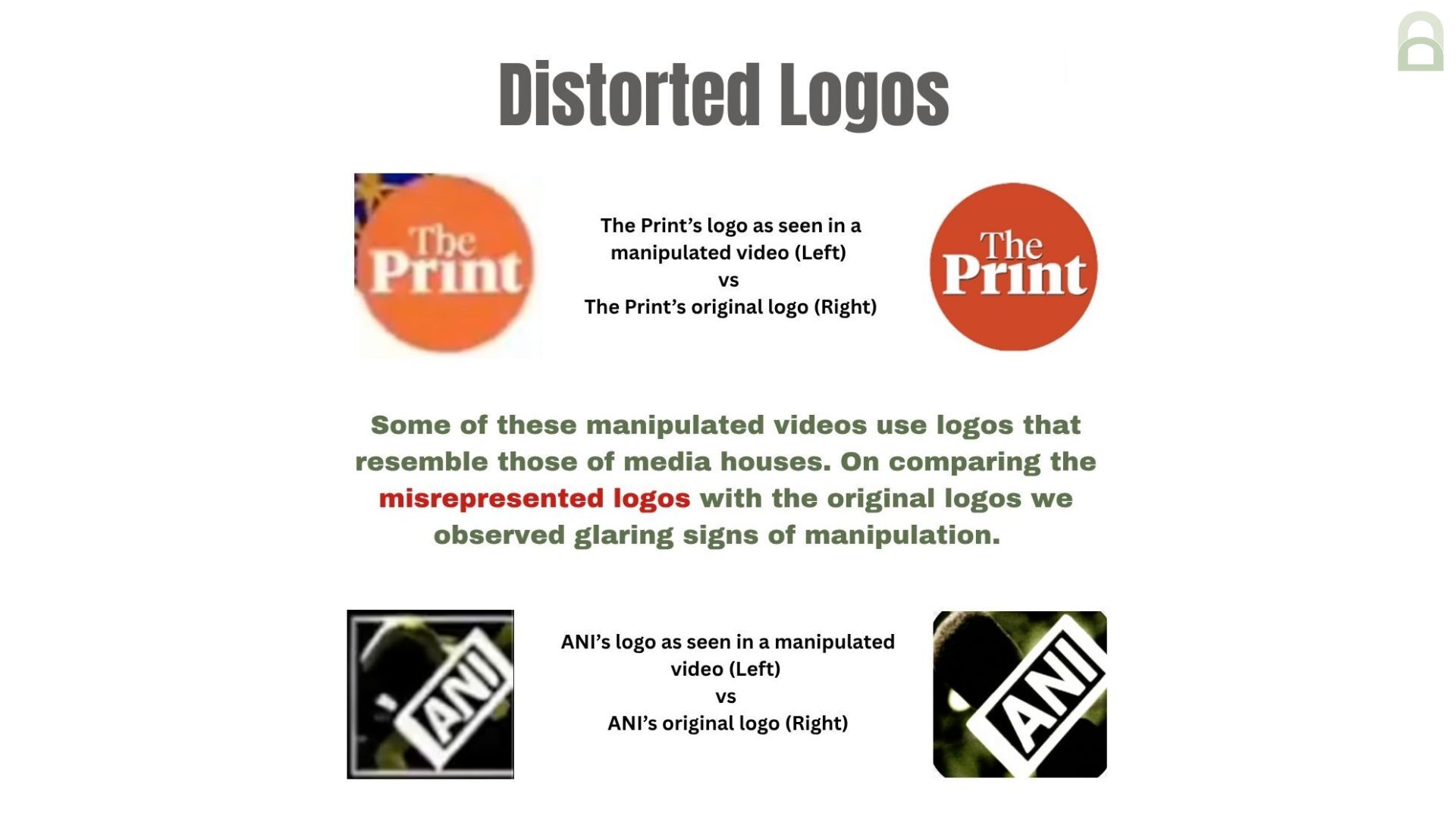

Another running theme in these videos was the use of distorted logos of media houses. The logos looked similar to the originals but were not identical; the discrepancies included different font sizes or typefaces.

There was more to these videos than recurring visual cues that pointed to manipulation. Our observations and expert analyses also helped us assess the trends in the audio tracks in the videos. Most videos used voice clones or synthetic voices that sounded somewhat similar to the voices of the people apparently featured in the videos.

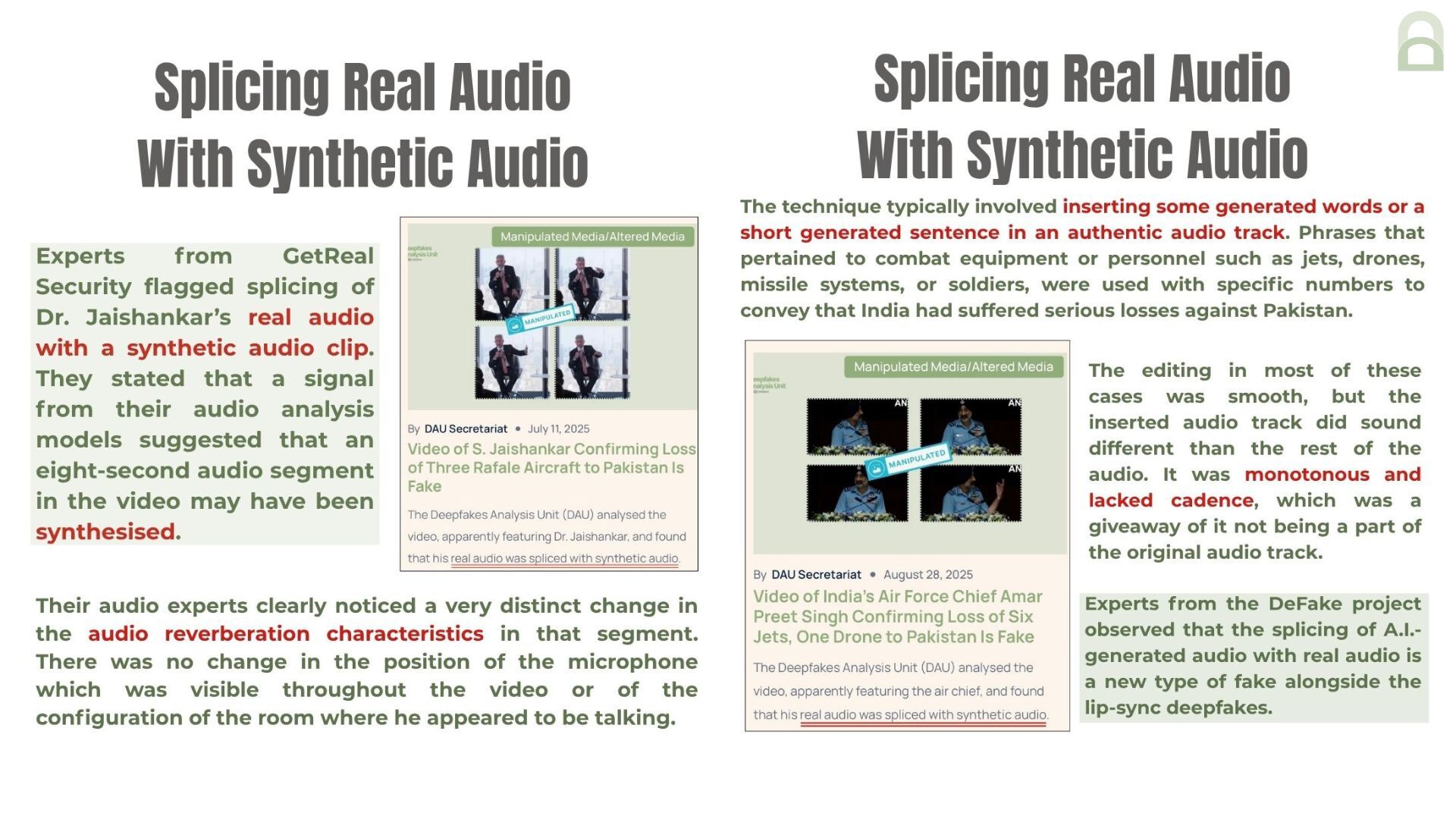

In some cases, a few words from the original audio track were retained in the synthetic audio. We also saw a few examples of audio splicing, which involved stitching together parts of the original audio with the synthetic audio tracks.

In the course of our analysis, we came across only a handful of deepfakes, which we debunked; one of those was a video apparently featuring Prime Minister Narendra Modi. You can read more about that and all the other reports on “Operation Sindoor” here.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files escalated to us for analysis are not embedded here because we do not intend to contribute to their virality.

If you are an IFCN signatory and would like us to verify harmful or misleading audio and video content that you suspect is A.I.-generated or manipulated using A.I., send it our way for analysis using this form.