The Deepfakes Analysis Unit (DAU) analysed a video featuring a host of Indian celebrities, apparently endorsing a gaming application launched by Mukesh Ambani, chairperson of Reliance Industries. After putting the video through A.I. detection tools and getting our expert partners to weigh in, we were able to conclude that A.I.-generated audio was used to fabricate the video.

The 56-second video in Hindi, embedded in a Facebook post, was sent to the DAU by a fact-checking partner for analysis.

The video opens with visuals of Mukesh Ambani seemingly speaking at a public event. It is followed by a clip of his son Anant, also a business person associated with Reliance Industries and its philanthropic arm. Indian cricketer Virat Kohli and Bollywood actor Hrithik Roshan feature in the other two clips seen in the video.

Graphics indicating multiplier returns from the app flash in some parts of the video while instrumental music plays throughout in the background.

The male voice accompanying Mukesh Ambani’s visuals announces an investment opportunity guaranteeing profitable returns to thousands of Indians. A distinct male voice accompanying each of the other three subjects urges viewers to invest in a gaming app and achieve quick financial rewards. The voices linked to the men sound somewhat similar to their natural voices; however, the differences are noticeable on close observation.

In the clip of Mukesh Ambani the camera is zoomed in on his face. The microphone seems to oddly disappear into his lower jawline as he speaks, and in some bits his mouth and teeth seem to be quivering. His lip-sync is imperfect, and his voice sounds nasal and robotic.

Anant Ambani appears to be speaking into a handheld microphone bearing a logo resembling that of the news agency ANI. His lip movements mostly match the audio; however, there is a strange quivering around his lips.

The audio for Mr. Kohli and Mr. Roshan is not in sync with their visuals, and their voices lack inflection, making their speech sound scripted. A logo resembling that of Royal Challengers Bengaluru, the Bengaluru-based cricket franchise of the Indian Premier League (IPL), appears at the top right corner of the frames featuring Kohli.

The video ends with a male voiceover, sounding similar to the one attributed to Kohli, asking viewers to download the gaming app using a link that is not visible in the video.

We undertook a reverse image search using screenshots from the video under review to find the origin of the various clips interspersed in it.

Mukesh Ambani’s clip could be traced to his address at a summit; a video of that address was published on January 10, 2024 from the official YouTube channel of Business Standard, a business daily.

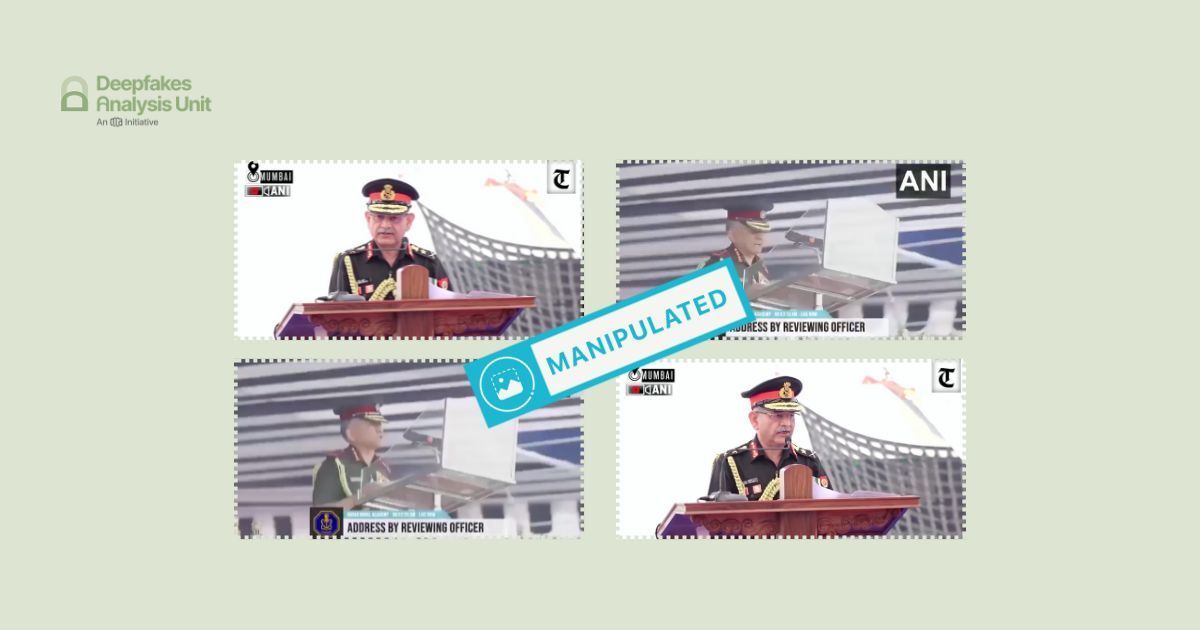

A 15-second clip from this video featuring Anant Ambani, published on February 26, 2024 from the official YouTube channel of ANI News, has been lifted and used in the fabricated video.

Kohli’s visuals could be traced to a podcast episode published on February 25, 2023 from the official YouTube channel of Royal Challengers Bengaluru. Roshan’s clip has been lifted from this interview published on January 29, 2024 from the official YouTube channel of Film Companion Studios, a platform focussed on storytelling about films and entertainment.

The backdrop, clothing, and body language of each of the subjects are identical in the original clips and the doctored clips. However, none of them says anything about any kind of money-making app in the original clips. Anant Ambani speaks in Hindi in his original video, while the others speak in English in theirs. None of the graphics seen in the doctored video appear in any of the original videos.

To discern if A.I. had been used to manipulate the video, we put it through A.I. detection tools.

Hive AI’s deepfake video detection tool did not detect any manipulation using A.I. in the visuals; however, its audio tool indicated tampering using A.I. in portions toward the end of the audio track. Those portions featured visuals of Kohli and Roshan.

We also ran the video through our partner TrueMedia’s deepfake detector, which suggested substantial evidence of manipulation in the video overall.

In a further breakdown of the analysis, the tool gave a 100 percent confidence score to the subcategory of “AI-generated audio detection”, and an 83 percent confidence score to “audio analysis”, the latter indicating that the audio could have been produced using an A.I. audio generator, thereby complementing the former confidence score.

The tool also gave a 99 percent confidence score to the subcategory of “face manipulation”, pointing to the probability that A.I. was used to tamper with the faces featured in this video.

To get further analysis on the audio, we also put it through the A.I. speech classifier of ElevenLabs, a company specialising in A.I. voice research and deployment. It returned results as “uncertain”, suggesting that the tool was not sure if the audio track used in the video was generated using their software.

Subsequently, we reached out to ElevenLabs to get a confirmation on the results from the classifier. They told us that they could confirm neither that the audio was A.I.-generated nor that it originated from their platform.

We also sought expert analysis from our partners at RIT’s DeFake Project to make sense of the oddities we noticed around the faces of the people featured in this video. Saniat Sohrawardi from the project stated that the video appears to be a lip-sync deepfake.

Mr. Sohrawardi mentioned that the same algorithm seems to have been used to manipulate all the faces in the video. He said that, given the quality of the video, it is likely that speech2lip was used. He was referring to a high-fidelity speech-to-lip generation code repository, accessible online, that helps in creating highly accurate lip-sync videos.

Sohrawardi noted that the mouth and the surrounding areas of the speakers seem to have been regenerated using an A.I. model to sync with the audio, while the rest of each subject’s face appears unaltered. The audio seems to have been generated, he added.

This explains the visual anomalies in the doctored video, such as the microphone disappearing into Mukesh Ambani’s jawline as he speaks.

We also escalated the video to our partner GetReal Labs, co-founded by Dr. Hany Farid and his team, which specialises in digital forensics and A.I. detection. They noted that each of the speakers in this video has clear lip-sync artifacts and that the speech is desynchronised from the lips in multiple portions. Their analysis engine also flagged the audio as synthetic.

Based on our observations and expert analyses, we assessed that the video featuring the billionaire father-son duo, the cricketer, and the actor was fabricated using original footage and A.I.-generated audio to falsely link them to a dubious gaming application.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read below the fact-checks related to this piece published by our partners:

Fake video of Mukesh Ambani and several other celebrities promoting a gaming app goes viral

Fact Check: This video of the Ambani family promoting the Aviator app is a deepfake