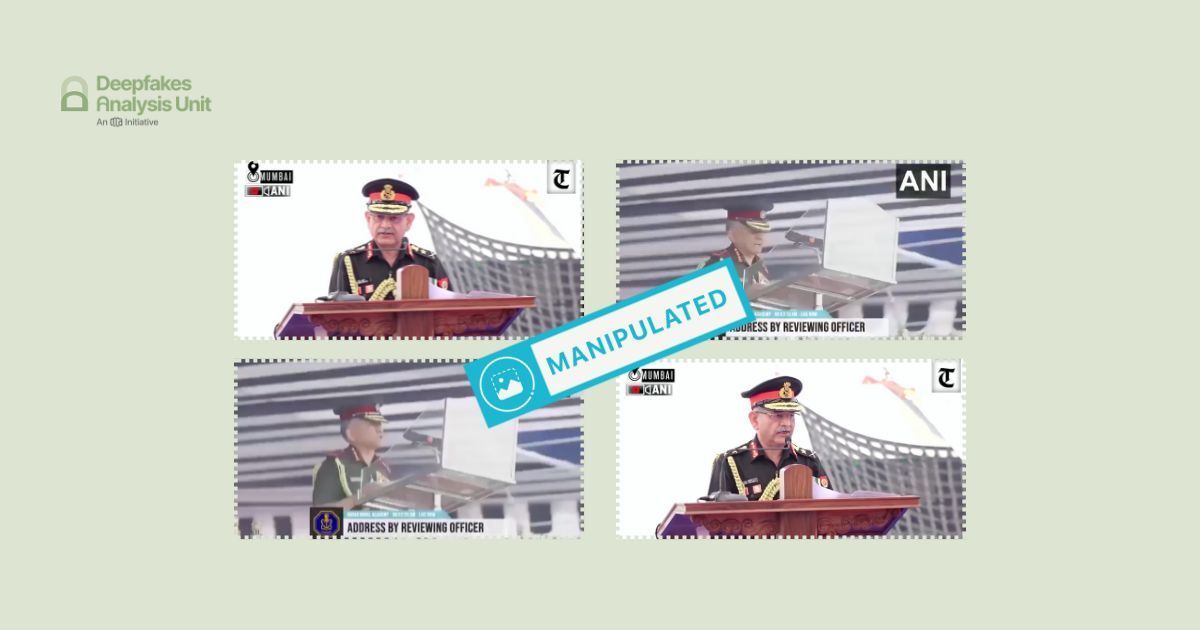

The Deepfakes Analysis Unit (DAU) analysed a video that purports to show Gen. Anil Chauhan, India’s Chief of Defence Staff, stating that India lost seven jets to Pakistan and asked for a ceasefire. After putting the video through A.I. detection tools and getting our expert partners to weigh in, we were able to conclude that an original video of the general was used to fabricate this video with A.I.-generated audio.

The two-minute-and-28-second video in English was escalated to the DAU multiple times by its fact-checking partners for analysis. The video was posted from several accounts on X, formerly Twitter, albeit with different accompanying text.

One of the accounts posted the video on July 26 with accompanying text in Urdu, which loosely translates to: "Our 7 aircraft were destroyed. Yes we had requested a ceasefire because we did not want to escalate the war in the region. (Indian Chief of Defence Staff General Anil Chauhan)". The location information with the post, which has more than 44,000 views, suggests that the account belongs to someone from the Punjab province of Pakistan.

We do not have any evidence to suggest whether the video originated from any of the accounts on X or elsewhere.

The fact-checking unit of the Press Information Bureau, which debunks misinformation related to the Indian government, recently posted a fact-check from its verified handle on X, debunking the video attributed to General Chauhan.

In the video, the general is captured in a medium close-up. He appears to be standing at a podium with multiple microphones in front of him, making it seem that he is addressing a gathering. His hands move in an animated way. For a second he even appears to be pulling some sheets of paper from the lectern and putting them back quickly.

The backdrop primarily comprises a dark blue curtain along with a patch of black. A logo resembling that of ANI, an Indian news agency, is visible in the top right corner of the video frame; however, the letters in the logo look distorted.

The video quality is decent overall, but there is a noticeable lag between the audio and video tracks, making the general’s lip-sync appear inconsistent. In some frames, his teeth seem to disappear entirely, while in others they resemble an unnaturally bright white patch.

The general’s upper body appears to be notably stiff. His gaze remains fixed to the right for the most part even when he turns his head forward. His eyes look unusually shiny and blink rather slowly.

At the five-second mark in the video, as the general lifts his hands, a wire-like object seems to sprout from his left thumb and a tiny disc-like object also surfaces from his right index finger, almost disfiguring it. Both these mysterious objects disappear in a fraction of a second. In certain frames, his knuckles and fingers seem to change shape, as does the lectern in front of him. At one point, the microphones appear to float without any visible base.

Some garbled characters are visible on his uniform in place of his name tag, and unidentifiable military regalia, which appear to change shape throughout the video, are visible on his epaulettes instead of the Indian national emblem.

The oddities highlighted in the visuals point to signs of possible digital tampering in the video.

On comparing the voice attributed to the general in the video with his recorded videos available online, similarities can be identified in the voice, accent, diction, and also his mellow tone. However, he seems to speak faster in real life. The overall delivery in the video under review sounds flat and monotonous, and lacks variation in pitch. A persistent echo can be heard throughout the audio track, which lacks any ambient sound.

We undertook a reverse image search using screenshots from the video and traced the general’s clip to this video published on July 25, 2025 from the official YouTube channel of ANI News. His backdrop and clothing are identical in the video under review and the one we traced, except for the name tag and the insignia on his uniform, which are clearly visible in the video we traced, with the insignia being that of the Indian Army.

The wire-like and disc-shaped objects do not feature in the source video, which carries a logo of ANI in the top right corner of the video frame and that of YouTube in the bottom right corner. The rings visible on the middle finger of his right hand and the ring finger of his left hand in the source video are oddly missing from the doctored video.

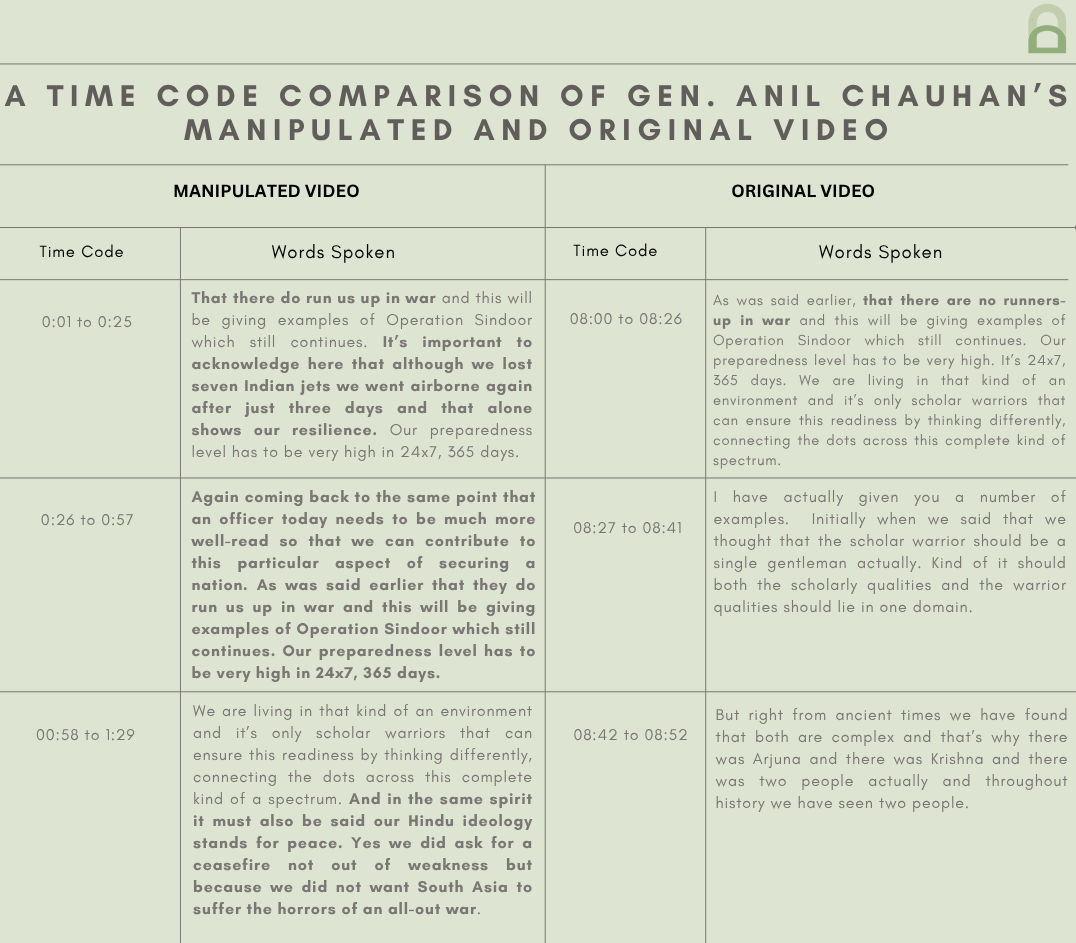

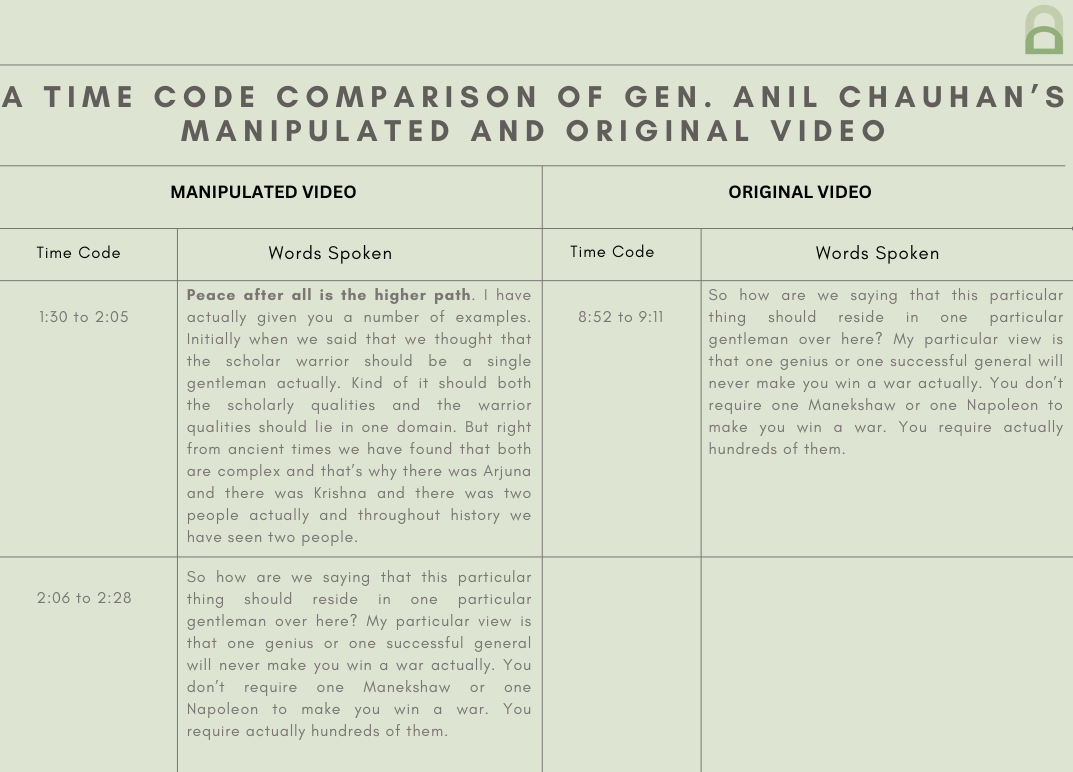

The audio in the source video is mostly in English with some bits in Hindi. The audio tracks in the source and doctored video are almost identical in terms of the content but for a few sentences. The doctored audio contains at least three sentences that cannot be heard in the original audio and also repeats a sentence that can only be heard once in the original.

However, the voices in the audio tracks are not identical and there is a noticeable static noise in the original video, which is missing from the doctored video.

The general’s body language is more relaxed in the source video, with his eyes as well as his upper body moving left and right, unlike in the doctored video. When we compared the visuals that accompany the matching portions of audio in the two videos, we observed that the general’s body language and gestures are completely different. Despite this, the manipulated video shows no visible jump cuts or transitions and looks fairly seamless.

Our observations suggest that the manipulated video was not created by simply lifting the segment of the original video that carries the corresponding audio.

We have noticed the emergence of a new trend wherein videos featuring top Indian ministers or army officials are used with synthetic audio to peddle misinformation about India losing military hardware to Pakistan during the recent military escalations. The DAU has debunked a few such videos, including one apparently featuring Dr. S. Jaishankar, India’s External Affairs Minister and another one with the visuals of Lt. Gen. Rahul Singh, India’s deputy army chief. In both the videos, the original audio tracks of the persons featured were spliced with A.I.-generated audio.

We have shared below a table that compares the audio tracks from the original video featuring the general with the doctored one to give our readers a sense of how false narratives are being spun. By no means do we want to give any oxygen to the bad actors behind this misleading and harmful video.

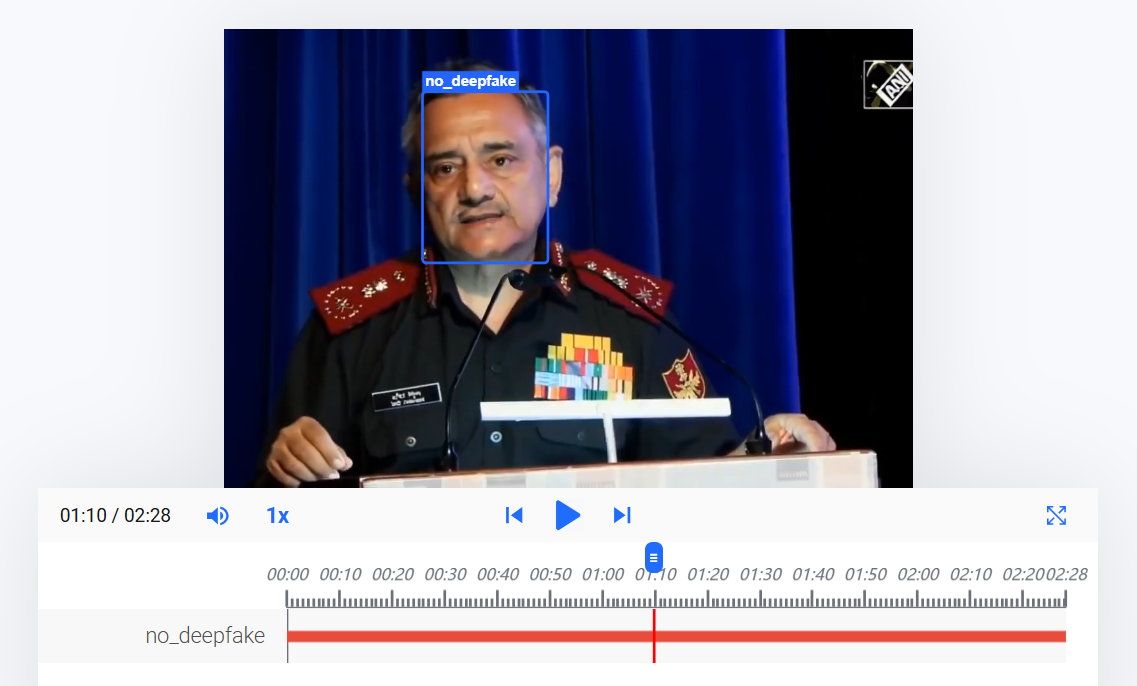

To discern the extent of A.I. manipulation in the video under review, we put it through A.I. detection tools.

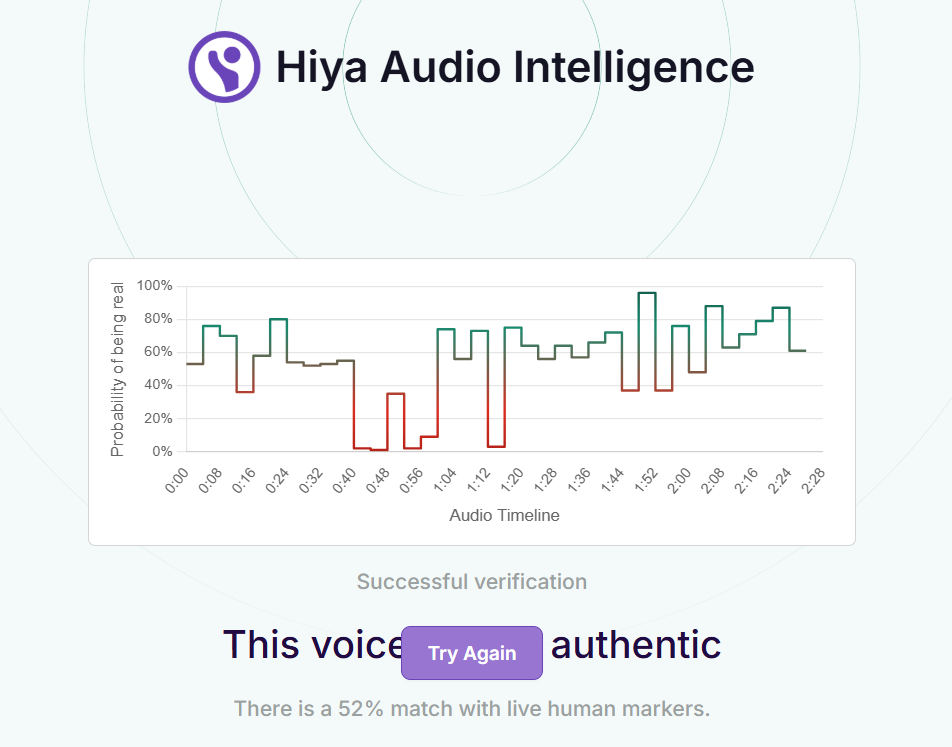

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, indicated that there is a 48 percent probability of the audio track in the video having been generated or modified using A.I.

Hive AI’s deepfake video detection tool did not highlight any markers of A.I. manipulation in the video track. Their audio detection tool, too, did not indicate that any portion of the audio track was A.I.-generated.

We also ran the audio track through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. The results indicated that it was “very unlikely” that the audio track used in the video was generated using their platform.

When we reached out to ElevenLabs for a comment on the analysis, they told us that they were unable to conclusively determine that the synthetic audio originated from their platform. They noted that the general has now been included in their safeguard systems to help prevent any future unauthorised cloning or misuse of his voice with ElevenLabs’ tools.

We also reached out to our partners at RIT’s DeFake Project to get expert analysis on the video. Saniat Sohrawardi and Kelly Wu from the project noted that the manipulation in this video is more apparent compared to some of the recent videos analysed by them such as this one.

Mr. Sohrawardi pointed to the clarity of the audio, stating that it is unrealistically clear for a public address. He also added that the voice does not sound like that of the general.

Focusing on the video track, he mentioned that it has several visual artefacts that are typical of generated videos. He noted that the video was probably generated from a screenshot of the original video. Echoing our observations, he pointed to the distortion of the general’s nametag, emblems, and buttons throughout the video.

He further added that the general’s hands retain artefacts of the microphone, uniform, or watch, due to bad generation. He also highlighted a major artefact by pointing to a specific time code where the podium appears to fold into a file and then retract.

He suggested that these artefacts indicate a changing trend where image and audio are used in video generation tools to fabricate videos. He pointed to HeyGen, an A.I. video generator, as one of the platforms used to generate videos using images.

To get another expert to weigh in on the video, we escalated it to the Global Online Deepfake Detection System (GODDS), a detection system set up by Northwestern University’s Security & AI Lab (NSAIL). The video was analysed by two human analysts, run through 22 deepfake detection algorithms for video analysis, and seven deepfake detection algorithms for audio analysis.

Of the 22 predictive models, 14 gave a higher probability of the video being fake and the remaining eight gave a lower probability of the video being fake. Five of the seven predictive models gave a high confidence score to the audio being fake and the remaining two models gave a low confidence score to the audio being fake.
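To put the model counts above in proportion, the short sketch below converts them into shares. It is only an illustration of the arithmetic: the GODDS report summarised here does not say how the individual model outputs are combined, so the simple "share of models leaning towards fake" used below, and the helper name `fake_share`, are our own assumptions and not the GODDS method.

```python
from fractions import Fraction


def fake_share(flagged: int, total: int) -> Fraction:
    """Return the share of models that leaned towards 'fake'.

    Hypothetical helper for illustration only; it is not part of GODDS.
    """
    if total <= 0 or not 0 <= flagged <= total:
        raise ValueError("flagged must lie between 0 and a positive total")
    return Fraction(flagged, total)


# Counts as reported above: 14 of 22 video models, 5 of 7 audio models.
video = fake_share(14, 22)
audio = fake_share(5, 7)

print(f"video models leaning fake: {float(video):.1%}")  # 63.6%
print(f"audio models leaning fake: {float(audio):.1%}")  # 71.4%
```

Read this way, a little under two-thirds of the video models and a little over two-thirds of the audio models leaned towards the content being fake, which is a majority in both cases but not a unanimous verdict.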

In their report, the team echoed our observations about the inconsistent lip-sync and the general’s purported voice lacking natural tonal and cadence variations characteristic of human voices. They also corroborated our observations about the general’s teeth seemingly changing shape and arrangement as he appears to speak.

They pointed to the general’s neck appearing significantly lighter in colour compared to his face. Focusing on the general’s mouth region, they highlighted segments where his teeth appear over his lips rather than behind them, resulting in an unnatural layering effect. In addition, they pointed to specific time codes where portions of the lower lip intersect the teeth and where lips appear to lose definition as they blur together.

According to their analysis, a watermark in the upper-right corner of the video frame could indicate video processing. In conclusion, the team stated that the video is likely generated via artificial intelligence.

On the basis of our findings and expert analyses, we can conclude that synthetic audio similar to that from an original video featuring the general was used to peddle a false narrative about India losing jets to Pakistan in the recent India-Pakistan conflict.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read below the fact-checks related to this piece published by our partners:

Did Indian CDS Gen Anil Chauhan ‘Admit’ To Losing 7 Jets During Operation Sindoor?

Video Of CDS Gen Anil Chauhan Admitting 7 Jet Losses To Pakistan Is A Deepfake