The Deepfakes Analysis Unit (DAU) analysed two videos that apparently feature Mukesh Ambani, chairperson of Reliance Industries, promoting dubious financial investment programs. One of the videos also features Arnab Goswami, Republic TV editor-in-chief. After putting the videos through A.I. detection tools and getting our expert partners to weigh in, we were able to establish that both videos carry original footage that has been manipulated with synthetic audio.

Two Facebook links were escalated to the DAU tipline for assessment; each link led to a separate video with content in English. One of them is a three-minute-and-11-second video with Mr. Ambani and Mr. Goswami, and the other is a four-minute-and-41-second video with Ambani and three unidentified men. Both videos seem to be peddling a get-rich-quick scheme.

The videos were posted from two different Facebook accounts on the same day. No other video was visible on the respective timelines associated with the accounts. Even though the videos are not accessible through those links anymore, the content may still be available on other platforms. We decided to address both videos together because of the similarity in their content as well as the subjects featured.

The video featuring Ambani and Goswami opens in a studio-like setting with Goswami apparently speaking to the camera. A male voice recorded over his visuals announces an investment project launched by Elon Musk in India, and claims that it has apparently benefitted the likes of Ambani. That voice states that the project has been launched to fight poverty, and that India will be the first country to benefit from it.

The next segment in that video shows Ambani speaking from what appears to be a public forum. The male voice recorded over his visuals is distinct from that recorded over Goswami’s visuals. The DAU has previously debunked a video in which the same visuals of Ambani were used with synthetic audio to make it appear that he was endorsing a dubious gaming app, when he was not.

The voice recorded over Ambani’s visuals mentions that the supposed app, linked to an A.I.-driven algorithm, will give hefty returns to Indian citizens even on a small investment and could hedge them against rising inflation. It claims that Ambani has used the app himself to verify its authenticity. The voice urges people to sign up for the app urgently using a link; however, no link is visible anywhere in the video.

The second video featuring Ambani seems like a recording of an online video call with him in the main frame and three men in different frames arranged vertically along the left side. The video opens with him apparently speaking to the camera from an office-like setup; the male voice with his visuals talks about an A.I.-driven investment platform. No accompanying audio for the three other men can be heard in the video.

The male voice with Ambani’s visuals describes a supposed A.I.-driven investment platform and its benefits for Indian citizens. It suggests that some 100 people have been invited to participate in the program and that anyone who is able to view this video should sign up immediately, once again referring to a link that is not visible anywhere in the video.

We would like to mention here that the script for the audio with this particular video is identical to that used in A.I.-manipulated financial scam videos featuring billionaire businessman Gautam Adani and former Indian Prime Minister Dr. Manmohan Singh. We debunked both videos and compared their audio scripts to highlight the copycat nature of the content.

The lip-sync is off the mark for both Ambani and Goswami in their respective frames in the two videos. Text graphics at the bottom of the frame in both videos promise participants a certain sum of money.

Quivering around the mouth region and inconsistent dentition can be noticed in Ambani’s visuals in both videos. The voice attributed to him in the videos bears some similarity to his natural voice quality but sounds robotic and has an accent uncharacteristic of his delivery when compared with his recorded interviews and speeches.

In the video that features only Ambani, his skin tone around the forehead, right cheek, and right ear looks different from the rest of his face. His eye movements seem odd as he appears to be looking away from the camera while talking.

Goswami’s visuals seem to have puppet-like mouth movements with quivering lips. In some frames his teeth seem to disappear and his chin appears to be elongating. The voice with his visuals sounds somewhat like his but the accent sounds different and lacks his characteristic long pauses and high pitch, which can be heard in his recorded news shows.

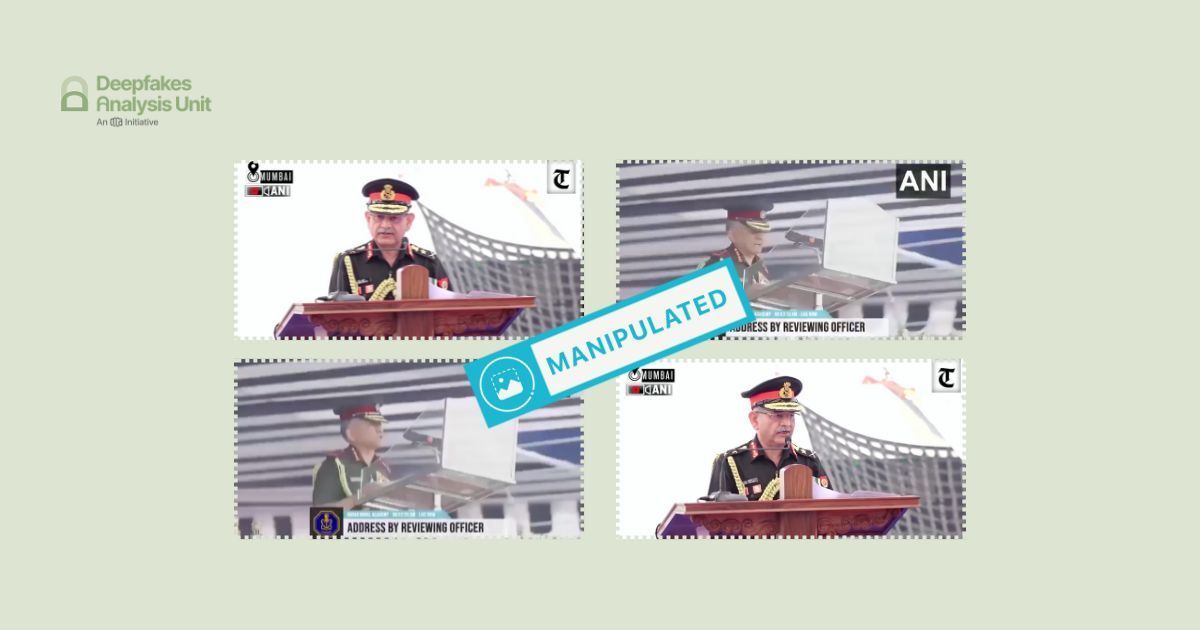

We undertook a reverse image search using screenshots from the videos under review to trace the origin of the clips interspersed in them.

For the video that features Goswami and Ambani, Goswami’s clip could be traced to this video published on Sep. 30, 2024 from the official YouTube channel of Republic World, an India-based English news channel. Ambani’s clip could be traced to his address at a summit, a video of which was published on Jan. 10, 2024 from the official YouTube channel of Business Standard, a business daily.

Ambani’s clip from the other video could be traced to this video published on Dec. 15, 2020 from the YouTube account that publishes updates from Reliance Industries. But the three men who also featured in the same clip with Ambani could not be seen anywhere in this video.

The clothing, backdrop, and body language of Ambani and Goswami in the two videos we reviewed and the videos we were able to locate are identical. The language in the original as well as the doctored videos is English; however, their audio tracks are totally different, and there is no mention of any investment scheme in the original videos.

The doctored videos seem to have used zoomed-in frames from the original videos, cutting off the backdrop as well as the logos in the original video of Goswami and in the one of Ambani at the summit. There was no logo in the other original video featuring Ambani. The text graphics in the doctored videos are not part of any of the original videos.

To discern the extent of A.I. manipulation in the videos under review, we put them through A.I.-detection tools.

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, indicated that there is a 96 percent probability that an A.I.-generated voice was used in the video featuring Ambani and Goswami.

For the other video, supposedly featuring only Ambani’s voice, the tool returned results indicating that there is a 99 percent probability of the audio track being A.I.-generated.

Hive AI’s deepfake video detection tool indicated that the video apparently featuring both Goswami and Ambani was indeed manipulated using A.I. It pointed out a few markers in the video but only on Ambani’s face.

For the other video featuring Ambani, the tool failed to trace any A.I. manipulation on Ambani’s face. However, it highlighted markers of A.I. manipulation on the face of one of the three men featured in that video, though none of the men can be heard speaking in the video.

Hive AI’s audio detection tool indicated that the audio track supposedly carrying Goswami’s and Ambani’s voices was manipulated with A.I. In the other video, the tool found evidence of A.I. manipulation in all but the last 10 seconds of the audio purported to be Ambani’s voice.

The deepfake detector of our partner TrueMedia suggested substantial evidence of manipulation in the video apparently featuring Ambani and Goswami. The “A.I.-generated insights” offered by the tool provide additional contextual analysis by stating that the video transcript reads like an online scam, making exaggerated claims with promotional language.

In a breakdown of the overall analysis, the tool gave a 59 percent confidence score to both “face manipulation detector” and “video facial analysis” subcategories. The former detects potential A.I. manipulation of faces, as in cases of faceswaps and facial reenactment, while the latter analyses video frames for unusual patterns in facial features.

The tool gave a 100 percent confidence score to the subcategories of “AI-generated audio detector” and “voice anti-spoofing analysis”, and a 96 percent confidence score to the subcategory of “audio authenticity detector”. All the subcategories analyse audio for evidence that it was created by an A.I.-audio generator or cloning.

The tool detected substantial evidence of manipulation for the other Ambani video as well. The “A.I.-generated insights” from the tool suggest that the video transcript resembles a scripted promotional message or scam, replete with overly polished language that promises unrealistic financial gains.

The tool gave a 74 percent confidence score to the subcategory of “face manipulation detector”; “video facial analysis” received a confidence score of 62 percent. The tool gave a 100 percent confidence score to the subcategories of “voice anti-spoofing analysis” as well as “A.I.-generated audio detector”; the “audio authenticity detector” subcategory received a confidence score of 99 percent.

For a further analysis of the audio tracks from the two videos, we put them through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. In both cases, the classifier returned results as “very likely”, indicating that it is highly probable that the audio tracks in the two videos were generated using their software.

To get another expert analysis on the videos, we escalated them to the Global Deepfake Detection System (GODDS), a detection system set up by Northwestern University’s Security & AI Lab (NSAIL).

For the video featuring Goswami and Ambani, the team used 15 deepfake detection algorithms and two human analysts to review the media. Of the 15 predictive models used by them, six models gave a higher probability of the video being fake, while the nine remaining models indicated a lower probability of the video being fake.

The team noted in their report that the jaws of both the subjects appear to move asynchronously with the rest of the face at numerous points in the video. They further added that in some frames featuring Ambani the shape of his mouth seems distorted, and that his teeth and mouth appear misshapen, especially when parts of the mouth are covered by the microphone.

Their analysts also spotted frames in the video where a blank white screen flashes, which they believe has been inserted into the video and could be an indicator of the video being inauthentic.

For the video featuring Ambani, the detection system used 22 deepfake detection algorithms and two human analysts. Of the 22 models, seven models gave a higher probability of the video being fake, while the remaining 15 models indicated a lower probability of the video being fake.

The team’s observations on the audio track in the video corroborated our analysis. In their report they noted that the video quality is extremely blurry, which according to them is a commonly used tactic to hide content manipulations. They further added that the blurry appearance can make aspects of a subject’s face, such as Ambani’s ear in this case, disappear into the surrounding environment.

They noticed instances of visual manipulation where the video freezes while the audio track continues; the video track then restarts but lacks continuity with the previous frame. They also observed that the mouth and speech of Ambani did not align in the video, which contributed to an unnatural appearance.

In the overall verdict, the GODDS team concluded that both videos are likely fake and generated or manipulated via artificial intelligence.
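The model tallies reported by GODDS for the two videos can be placed side by side with a short calculation. The sketch below is only an illustration of that arithmetic using the counts quoted above; the names `reports` and `share_flagging` are hypothetical, and this is not how GODDS itself weighs its models or its human analysts.

```python
from fractions import Fraction

# Counts taken from the GODDS summaries quoted in this report.
# The dictionary layout and key names are illustrative assumptions.
reports = {
    "Ambani and Goswami video": {"models": 15, "higher_fake_probability": 6},
    "Ambani-only video": {"models": 22, "higher_fake_probability": 7},
}


def share_flagging(report):
    """Return the fraction of models that gave a higher probability of 'fake'."""
    return Fraction(report["higher_fake_probability"], report["models"])


for name, report in reports.items():
    share = share_flagging(report)
    print(
        f"{name}: {report['higher_fake_probability']} of "
        f"{report['models']} models ({float(share):.0%})"
    )
```

In both cases fewer than half of the models leaned towards "fake", so the overall verdict also reflects the review by the human analysts described above.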

To get another expert view on the manipulations in the videos, we reached out to our partners at RIT’s DeFake Project. Saniat Sohrawardi from the project identified the video with Ambani and Goswami as a lip-sync deepfake scam that seems to have used Wav2Lip, a speech-to-lip generation code repository available online, for the video generation.

Mr. Sohrawardi mentioned that the video has several synchronisation issues. He noted that those could be a result of the manner in which the mouth movements of the subjects were generated and added to the video. He mentioned that the mouth movements of the source used as the basis for the desired manipulation seem to have been imperfectly transferred onto the subjects in the video track.

Kelly Wu from the project commented on the second video, which features only Ambani. She highlighted that one sentence in Ambani’s purported audio track seems to convey that the supposed project is a limited-offer deal, which according to her is a very common narrative in fake videos.

Ms. Wu pointed to a similar scam video which the RIT team had analysed for the DAU, suggesting that the audio in that video opens the same way as Ambani’s purported audio opens in this video. She noted that there is a possibility that the same group is making these deceptive videos.

On the basis of our findings and analyses from experts, we can conclude that original footage was used with synthetic audio to fabricate both videos. There seems to be a continued attempt to falsely link supposed financial schemes to businessmen and anchors, such as Ambani and Goswami in this case, to scam people.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated video/audio files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.