The Deepfakes Analysis Unit (DAU) analysed a video that features Sudhir Chaudhary, an anchor with a Hindi news channel, and Dr. Naresh Trehan, a cardiac surgeon, apparently promoting a supposed government-endorsed cure for prostatitis. However, a statement issued by the Medanta group, of which Dr. Trehan is the chairman, noted that an A.I.-generated voice was used in the video to falsely represent the doctor’s voice. After putting the video through A.I. detection tools and getting experts to weigh in, we were able to conclude that synthetic audio was used to fabricate the video.

The two-minute-and-16-second video in Hindi was escalated to the DAU by a fact-checking partner for analysis. The video opens with Mr. Chaudhary speaking to the camera in a studio-like setting. A male voice recorded over his visuals announces a supposed crackdown on pharmaceutical companies and urologists withholding the ultimate cure for prostatitis, an ailment involving the prostate gland. The voice goes on to say that the government has launched a national program led by Trehan to treat prostatitis.

A logo resembling that of Aaj Tak, a Hindi news channel, is visible in the top right corner of the video frame. Supers in Hindi along the top of the video frame complement the video content while captions in Hindi at the bottom offer a transcript of the accompanying audio.

The second segment in the video shows Trehan speaking to the camera from a setting that has framed certificates in the backdrop. A male voice accompanying his clip claims that the supposed cure being promoted by the government can be easily concocted at home using natural ingredients and guarantees results in 16 days.

That voice further adds that some 42,000 men have been cured so far. It goes on to say that due to pharma backlash the official website associated with the supposed program is often blocked. The video abruptly ends with the same voice urging people to click on some button to access a link; neither the button nor the link is visible in the video.

In the video, Chaudhary’s visuals span barely five seconds, after which the male voice recorded over his visuals can be heard over unrelated visuals, including those of a man in a police uniform. Trehan’s segment is also interspersed with several cutaways of men in varied settings, including outdoors as well as laboratory and hospital settings. Most of the men featured look like westerners. A fleeting A.I.-generated image of an elderly couple playing chess also flashes in the video several times.

Trehan’s face is more in focus than Chaudhary’s. For the most part, the video clips of each of them are out of sync with their respective audio tracks. Trehan’s chin appears to extend beyond its natural contours when his lips move to talk, and in some instances his moustache appears to move oddly as well; his teeth seem to change shape and his cheeks appear unnaturally stiff. Since Chaudhary’s clip is very short, not much detail could be picked up from it except that his chin area seemed a bit blurred.

The voice and accent being attributed to Chaudhary bear a striking resemblance to his actual voice and natural accent. However, the pauses usually heard in his news shows as well as in recordings available online are missing from the delivery. The audio associated with Trehan sounds somewhat like him when compared with his recorded interviews; however, it has a hurried and nasal quality to it. The pauses in the track are followed by a subtle whistling noise, which could be an attempt to mimic human breathing. The audio sounds more scripted and synthesised than natural.

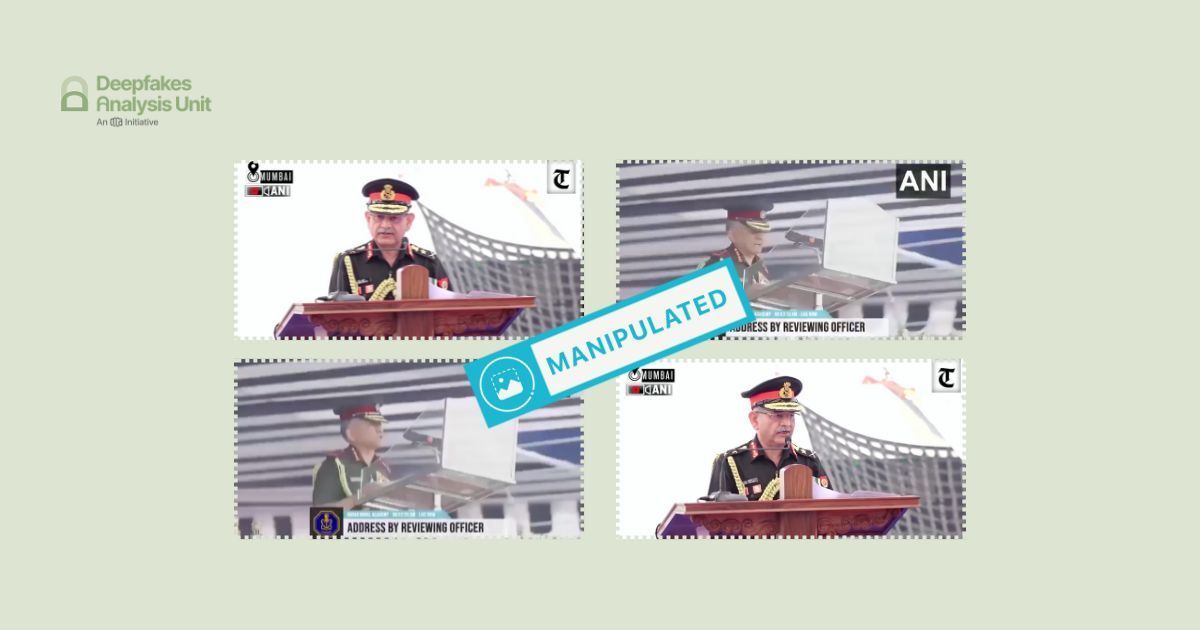

A reverse image search using screenshots from the video led us to two different sources and helped establish that the video we reviewed is composed of unrelated video clips that have been spliced.

Chaudhary’s segment led us to this video published on the official YouTube channel of Aaj Tak on Oct. 18, 2024. Trehan’s segment led us to a video embedded in this post published on Jan. 16, 2021 from Medanta’s official X handle. Neither of these videos mentions anything about a cure for prostatitis.

The clothing, backdrop, and body language of Chaudhary and Trehan in these two videos match exactly with their respective clips in the doctored video. The supers and captions visible in the doctored video are not visible in the original videos. The logo of Medanta can be seen in Trehan’s original video; the Aaj Tak logo in the original video is different from the logo superimposed on the doctored video.

Chaudhary speaks in Hindi in the original as well as the doctored version, but Trehan speaks in English in the original video. The random visuals of men that feature in the manipulated video could not be found in either of the original videos; some of them could be traced to websites featuring stock footage.

To discern the extent of A.I.-manipulation in the video under review, we put it through A.I. detection tools.

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, returned results indicating that there is a 99 percent probability that an A.I.-generated audio track was used in the video.

Hive AI’s deepfake video detection tool indicated that the video was manipulated using A.I. It pointed out markers in multiple frames in the segment featuring Trehan. The company’s audio tool detected A.I.-manipulation throughout the audio track.

The deepfake detector of our partner TrueMedia indicated substantial evidence of manipulation in the video. The “A.I.-generated insights” offered by the tool provide additional contextual analysis by stating that the audio transcript reads like a promotional pitch and resembles an advertisement or scam rather than a genuine news report. Analysis from a human analyst further states that the lips move in weird and jumpy ways, indicating that the video is fake.

In a breakdown of the overall analysis, the tool gave a 93 percent confidence score to the “face manipulation detector” subcategory, which detects potential A.I. manipulation of faces, as in the case of face swaps and face reenactment.

The tool gave a 62 percent confidence score to the “video facial analysis” subcategory, which analyses video frames for unusual patterns and discrepancies in facial features. It also gave a 9 percent confidence score to the “deepfake face detector” subcategory, which uses a visual detection model to detect faces and check to see if they are deepfakes.

The tool gave a 100 percent confidence score to both the “voice anti-spoofing analysis” and “AI-generated audio detector” subcategories, which detect A.I.-generated audio. The tool also gave a 99 percent confidence score to the “audio authenticity detector” subcategory, which analyses audio for evidence that it was created by an A.I. generator or cloning.

We reached out to ElevenLabs, a company specialising in voice A.I. research and deployment, for an expert analysis of the audio. They told us that they were able to confirm that the audio is synthetic, implying that it was generated using A.I. The company has been actively identifying and blocking attempts to misuse its tools for generating prohibited content, and has taken steps to address this instance of misuse reported by us.

To get another expert to weigh in on the video, we reached out to our partners at RIT’s DeFake Project. Kelly Wu from the project stated that there were some artefacts around the doctor’s mouth region, particularly the moustache. She pointed to the flickering of Trehan’s moustache as his lips moved to speak, suggesting that this indicates the mouth region may have been generated.

Ms. Wu also pointed to an instance in the video where Trehan’s lower chin suddenly enlarges, noting that this could have been caused by the manipulation of the lip-sync.

Saniat Sohrawardi from the project assessed that the video is a lip-sync deepfake. He pointed to two artefacts, “toading” and glitching of the face, which he said were the result of misalignment during face extraction and eventual replacement. By toading he refers to a visual artefact that makes the throat area appear inflated, resembling the breathing of a toad.

Mr. Sohrawardi suggested that some scenes in the video contain synthetic video clips, which could have been created through a process of synthetic image generation followed by image-to-video conversion using tools such as Runway and Luma Dream Machine. Alternatively, these tools could have been used to generate the video using a full prompt. His analysis was based on the quality of the depth of field, the speed of motion, and the short length of the clips present in the video.

On the basis of our findings and analysis from experts, we can conclude that the video of Chaudhary and Trehan has been manipulated with synthetic audio to fabricate a narrative about a cure for prostatitis.

In recent months the DAU has debunked several videos that have misused original footage featuring prominent doctors including Ronni Gamzu, Rahil Chaudhary, and Bimal Chhajer to promote health misinformation.

(Written by Debraj Sarkar and Debopriya Bhattacharya, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read below the fact-checks related to this piece published by our partners:

Fact Check: This video of Sudhir Chaudhary and Naresh Trehan promoting a treatment for prostatitis is a deepfake

Fact Check: Are celebrities endorsing a magical cure for prostatitis?